BY MATTEO LUCCIO / CONTRIBUTOR / PALE BLUE DOT LLC PORTLAND, ORE. / WWW.PALEBLUEDOTLLC.COM

Once mass-deployed, self-driving cars promise to dramatically reduce the number of traffic fatalities, because they will never be drunk, sleepy, distracted, or aggressive, nor will they engage in such irresponsible human driving behaviors as going through red lights, texting, or playing chicken with bicyclists. They also promise to optimize routes so as to reduce traffic congestion and fuel consumption, as well as reduce the number of vehicles on the road as more people switch to using car services rather than owning their own vehicle. Lidar will be a key to making all of this possible.

All the major car manufacturers are developing self-driving cars, as are the driving services Uber and Lyft. In March 2016, GM acquired Cruise Automation, a 40-person, three-year-old software company that had been testing vehicles on the streets of San Francisco. Cruise Automation, along with Google, is among the few companies with permits from the state of California to test the cars. In January 2016, at the Consumer Electronics Show, GM announced that it was making a $500 million investment in Lyft in exchange for a 10 percent stake and an agreement to build a network of self-driving cars together.

In August 2016, Uber announced that it was acquiring Otto, a startup working to turn commercial trucks into self-driving freight haulers by developing hardware kits for existing truck models. The news came as Uber launched a public pilot program to deploy autonomous vehicles (with minders sitting in the front seat) to pick up passengers in Pittsburgh, Pennsylvania. Uber has been rapidly expanding its self-driving division, opening an Advanced Technologies Center in Pittsburgh staffed by 50 robotics engineers recruited from Carnegie Mellon University’s self-driving lab, as well as researchers from a number of other companies. Uber says that it will work on self-driving technology across three sectors: personal transportation, delivery, and trucking, leveraging both its ongoing robotics research and the data it collects from drivers who log 1.2 billion miles every month.

AUTONOMOUS VS. DRIVERLESS

Technology for autonomous vehicles builds on car navigation systems that have been commonplace for years and new collision-avoidance systems that are beginning to appear in production vehicles. User-operated, fully autonomous cars—which can deal with the full spectrum of road and traffic scenarios without driver assistance—will probably come to market within the next five years. Mass market adoption of driverless cars, on the other hand, will take much longer, due to social, legal, and insurance issues.

None of the systems on the road today are truly driverless, says John Eggert, Director of Sales & Marketing for Automotive Business at lidar manufacturer Velodyne. “Even in the Tesla system, the driver has to pay attention at all times and has to take control of the vehicle with very little warning,” he points out. In a fully autonomous vehicle, by contrast, drivers could read a book or sleep and have plenty of time to take back control of the vehicle. “To accomplish that is a much more difficult task than anything that is on the road today. Our sensor is relevant any time the driver does not have to pay attention to the road.”

MAPPING DATA

A fully developed system will require a broad mix of geospatial technologies:

1. devices already being used for car navigation: GPS receivers and cellular transceivers to exchange real-time data on traffic and road conditions, work zones, and detours;

2. devices currently being tested for collision avoidance: cameras, lidar, and radar;

3. inertial measurement units (IMUs) and wheel encoders, to compensate for gaps and degradation in GPS signals, together with on-board computers to “learn” from these data;

4. hard drives to store the data for use the next time a vehicle drives the same stretch of road;

5. a communication network through which all the vehicles in an area can alert each other in real time of new challenges—such as an accident or a downed tree.
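How IMUs and wheel encoders can bridge a gap in GPS coverage can be sketched in a few lines. The example below is a minimal illustration of dead reckoning, not any manufacturer's method: the `Pose` type, the `dead_reckon` and `track` functions, and the flat x/y coordinate frame are all hypothetical names and simplifications chosen for this sketch. Production systems typically fuse these inputs with a statistical filter rather than switching between them.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float        # metres east of an arbitrary origin (assumed flat local frame)
    y: float        # metres north of the same origin
    heading: float  # radians, counter-clockwise from east


def dead_reckon(pose, distance, yaw_rate, dt):
    """Advance the pose one time step without GPS.

    distance: metres travelled during the step (from the wheel encoder)
    yaw_rate: turn rate in radians per second (from the IMU)
    """
    # Use the heading at the middle of the step to approximate a curved path.
    mid_heading = pose.heading + 0.5 * yaw_rate * dt
    return Pose(
        x=pose.x + distance * math.cos(mid_heading),
        y=pose.y + distance * math.sin(mid_heading),
        heading=pose.heading + yaw_rate * dt,
    )


def track(start, samples, dt):
    """Follow a vehicle through samples of (gps_fix, distance, yaw_rate).

    gps_fix is an (x, y) tuple when a fix is available, or None during an outage.
    """
    pose = start
    for gps_fix, distance, yaw_rate in samples:
        if gps_fix is not None:
            # Trust the GPS position; keep integrating heading from the IMU.
            pose = Pose(gps_fix[0], gps_fix[1], pose.heading + yaw_rate * dt)
        else:
            # GPS gap: fall back on wheel encoder and IMU.
            pose = dead_reckon(pose, distance, yaw_rate, dt)
    return pose
```

In this sketch the estimate drifts the longer the outage lasts, because encoder and IMU errors accumulate with every step. That drift is one reason the other items on the list, such as lidar and stored map data, matter.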

Autonomous vehicles will also require real-time data on traffic, road conditions, obstructions, hazards, construction, and weather. Much of that information is already available to drivers through mobile applications.

Finally, autonomous vehicles will require detailed mapping data. A key open question is to what extent that data will be collected in advance and, if so, by whom—and to what extent the vehicles will map the roads and road conditions in real time. “This whole autonomous vehicle market is in a fledgling state,” says Mark Romano, Geospatial Product Manager at Harris Corporation, another lidar manufacturer.


Mapping most roads with sufficient accuracy and frequency to support fully autonomous vehicles is a massive undertaking, requiring data from mobile terrestrial scanners as well as from aerial lidar and high-definition mapping sensors. “The jury is still out on whether this is going to become a virtual mapping process from the vehicle or are we going to pre-build what I call ‘the navigation maps of the future’ into vehicles, which will have more of a collision-avoidance role,” says Romano. “The problem is that no one has yet found a way to scale this process—mapping, essentially, all the roads of the United States and the world.” There is some movement afoot toward setting up consortia to standardize these future navigation maps, he adds, “but we are not there yet.”

We do not yet have a 3D “tunnel” of data that vehicles can use to drive down a road and fully understand their environment, Romano points out. Some vehicle manufacturers have installed large commercial lidar systems on the roofs of their test vehicles, which does not conform to the public’s idea of a car. Ideally, these systems will eventually be small enough to be integrated into the body of a vehicle.

RESOLUTION AND RANGE

Velodyne entered a self-driving vehicle in the first DARPA challenge, in 2004, Eggert recalls. “No one finished, but we went the third farthest distance of all of the participants. At that time, we were using strictly vision. Out of that challenge, our CEO, David Hall, realized that a much greater capability was necessary on the perception side of these vehicles and that is why we developed the full 3D, high-definition lidar for self-driving cars.”

Contrary to many media reports, he argues, the biggest challenges right now are not making lidar sensors smaller and cheaper. “Those are ideals, but the goal right now is to gather as much information as possible about the environment around you. The Velodyne sensor is very rich in data. It provides a CAD model of the environment around the vehicle. Our customers want higher resolution and longer range.” Additionally, he points out, the sensors must be automotive grade.

“I don’t think that anyone is seriously thinking that lidar is the only sensor that needs to be on board, or that cameras or radar are,” Eggert says. “All three of them are needed, of course. Then, on top of that, another critical component is high-definition maps—which, by the way, are made possible by lidar and their use is also made possible by high-definition lidar. However, all the companies that have shown the greatest capability of driving with the least amount of intervention by the driver have used high-definition lidar as the primary sensor on board.”

Velodyne is working with automakers Ford, Volkswagen, and Volvo, and with service providers Baidu, Navya, and ZMP. Ford and Baidu invested $75M each in Velodyne, providing them a minority share in the company. However, according to Velodyne, the agreement precludes any preferential treatment as far as receiving product early or at a discount. “Baidu has made it very clear that they have very high aspirations for full autonomy by 2021 or sooner,” says Eggert. “They have invested in us because they see lidar as a key technology to achieve that.”

At the Consumer Electronics Show (CES) in Las Vegas, in January 2017, Velodyne gave limited demonstrations to key customers of its ULTRA Puck VLP-32A high-definition sensor. “It demonstrates double the standard range of our current products,” Eggert says, “and also has the resolution of our larger HDL-64E sensor, which you see today on autonomous vehicles that are driving around town. We have actually taken that larger sensor and reduced the weight from about 10 kg to less than 1 kg and the size to one tenth.” See Figure 1.

FIGURE 1. The ULTRA Puck VLP-32A is able to see all things all the time to provide real-time 3D data to enable autonomous vehicles, with Velodyne’s patented 360° surround view.


SPATIAL ANALYTICS

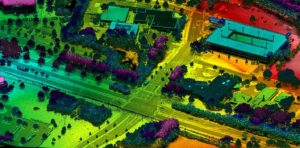

Esri, in partnership with Microsoft, HERE, and other companies, is working on the concept of a “connected car,” which both collects and receives data about road and traffic conditions (Figure 2). Esri’s focus is on analyzing that data. “We can do the real-time data streaming, ingest that data, detect events on the road, such as icy patches, then project out: if there is ice here and other road segments have similar characteristics, then we can project that there might be icy patches along the route that the car is traveling,” says Frits van der Schaaf, an Esri Business Development Manager. Perhaps, he suggests, if some conditions for autonomous driving are not met, the system would turn control back to the driver.
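The "project out" step that van der Schaaf describes can be illustrated with a small sketch. This is not Esri's implementation or its GeoEvent Server API. The `Segment` fields, the similarity rule, and the 50-meter elevation tolerance are hypothetical choices made only to show the idea of flagging route segments that resemble ones where ice was detected.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    segment_id: str
    elevation_m: float  # hypothetical attribute: mean elevation in metres
    shaded: bool        # hypothetical attribute: mostly in shade
    is_bridge: bool     # hypothetical attribute: bridge deck


def similar(a, b, max_elevation_gap_m=50.0):
    """Assumed similarity rule: same shade and bridge status, close elevation."""
    return (
        a.shaded == b.shaded
        and a.is_bridge == b.is_bridge
        and abs(a.elevation_m - b.elevation_m) <= max_elevation_gap_m
    )


def project_ice_risk(reported_icy, route):
    """Return IDs of route segments that resemble any segment reported as icy."""
    icy_ids = {segment.segment_id for segment in reported_icy}
    at_risk = []
    for segment in route:
        if segment.segment_id in icy_ids:
            continue  # already known to be icy, nothing to project
        if any(similar(segment, icy) for icy in reported_icy):
            at_risk.append(segment.segment_id)
    return at_risk


if __name__ == "__main__":
    icy = [Segment("A", 300.0, shaded=True, is_bridge=True)]
    route = [
        Segment("B", 320.0, shaded=True, is_bridge=True),
        Segment("C", 310.0, shaded=False, is_bridge=False),
        Segment("D", 500.0, shaded=True, is_bridge=True),
    ]
    print(project_ice_risk(icy, route))
```

A real system would presumably weigh many more characteristics, such as temperature and road surface, and would score risk rather than return a yes-or-no flag.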

FIGURE 2. Esri has partnered with Microsoft for this Connected Car consortium, which also includes Microsoft partners Swiss Re, Cubic Telecom, NXP, and IAV Automotive Engineering.

While autonomous driving relies primarily on the sensors on the car, van der Schaaf points out, the map provides a look around the corner at what the sensors cannot see. Esri’s role, he says, is in enriching the car’s “geographic context.” For example, near schools or in areas where many elderly people live, the system can advise the connected car or its driver to slow down and be more alert.

Esri also plays a role in facilitating the exchange of data between cars and public agencies, such as cities and departments of transportation. At CES, Esri demonstrated a scenario in which several cars reported hitting the same pothole; the city therefore assumed that the report was correct and dispatched nearby crews to repair it, knowing not only the pothole’s location but also how to prioritize the repair. Smart cities, he points out, also know the density and speed of traffic, because connected cars provide that information to the cloud, with traffic lights acting as local information hubs.
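The logic of the pothole scenario, in which a report is trusted once several cars agree, can be sketched as follows. The function name `confirmed_potholes`, the three-car threshold, and the grid-cell size are assumptions made for illustration. The article does not say how the demonstration grouped or counted reports.

```python
from collections import defaultdict


def confirmed_potholes(reports, min_cars=3, cell_deg=0.0001):
    """Group reports into grid cells and keep cells reported by enough cars.

    reports: iterable of (car_id, latitude, longitude) tuples
    min_cars: assumed number of distinct cars needed to trust a report
    cell_deg: assumed grid size in degrees (roughly 10 m of latitude)

    Returns a list of (latitude, longitude, car_count) tuples, most-reported
    first, so that crews can prioritize repairs.
    """
    cars_by_cell = defaultdict(set)
    for car_id, lat, lon in reports:
        cell = (round(lat / cell_deg), round(lon / cell_deg))
        cars_by_cell[cell].add(car_id)  # a set counts each car only once

    confirmed = [
        (cell[0] * cell_deg, cell[1] * cell_deg, len(cars))
        for cell, cars in cars_by_cell.items()
        if len(cars) >= min_cars
    ]
    confirmed.sort(key=lambda item: item[2], reverse=True)
    return confirmed
```

Counting distinct cars rather than raw reports keeps a single vehicle that hits the same pothole every day from confirming it alone. A fixed grid is the simplest grouping, although two reports of one pothole can fall on either side of a cell boundary, which a real system would need to handle.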

In a scenario demonstrated in a large parking lot at CES, a person without insurance who wanted to lease a car first had to accept an insurance program calculated on the fly based on his or her destination. The car offered the driver multiple routes, such as the shortest one, which cut through an area with higher accident rates, and an alternative one, which carried a somewhat lower insurance premium. Many layers on Esri’s platform have information that goes into the insurer’s risk assessment, van der Schaaf says. “The insurer that worked with us on this was Swiss Re and they were part of this showcase as well,” he says. In this scenario, the car does some autonomous driving and suggests stops along the way based on the user’s profile, such as for grocery shopping or to pick up the dry cleaning. “We provide the geographic context based on the user’s profile to make the experience not only safer but also more convenient,” van der Schaaf says.

“We put a lot of effort into performing spatial analytics and connecting to Microsoft’s Azure stack, which also contains HERE and TomTom as content providers,” van der Schaaf says. “Microsoft has developed an IOT capability that can ingest millions of records per second and analyze them. We have a product called GeoEvent Server that fits in that architecture and works with the Azure IOT components to do that high ingestion and on-the-fly spatial analytics on that data that comes in.”

Much of Esri’s data for this project comes through open data initiatives in its Living Atlas, such as analysis of traffic and accident-prone areas. Esri also uses data, such as driving behavior, that car manufacturers collect to help design new cars. “Land Rover is using that data to figure out how people are driving on roads in the Middle East vs. in Europe vs. in the United States,” van der Schaaf says. “What are the characteristics of the driving behavior based on temperature, road surface, terrain, slope, and so on? Analytics on that feed back into the car’s maintenance and design.”

MANUFACTURERS

High-end car manufacturers—including BMW, Mercedes, and Tesla—have already released cars with self-driving features or will do so soon, while Google has been experimenting with a fleet of autonomous vehicles. Ultimately, effective use of lidar for autonomous vehicle guidance and navigation requires cooperation on data and software standards between lidar manufacturers, vehicle manufacturers, and system integrators. On the hardware side, Romano says, “the problem with this industry is that it is not known whether the end users are willing to collaborate on the guidance or mapping or navigation system.” Commercial competition among lidar manufacturers and vehicle manufacturers will greatly limit their willingness to cooperate, he predicts.

“I would hope that we do see some standardization of what that system looks like,” says Romano. However, he points out, Tesla, Uber, and Google are currently pursuing very different approaches to the problem. “Some are thinking in terms of having a pre-loaded set of navigation data; others are thinking that the cars will do all of the work for them, with integrated systems.” In the long term, the latter is the preferred way, he argues, but the hardware is not there yet. “We are some years away from seeing fully integrated vehicle mounted systems that are going to be at scale.”

THE ROLE OF REGULATION

Federal and state regulators will also play a crucial role in the advent of autonomous vehicles, because they will ultimately have to approve them for use. Presumably, Congress and federal regulators—especially the National Highway Traffic Safety Administration—will set minimum safety standards, while states will be free to impose more stringent requirements. Romano likens the regulatory future for autonomous vehicles to the current one for UAS (unmanned aerial systems): “Just like they are going to test the airspace slowly for UAS, they are also going to test transportation slowly.”

At first, use might be limited to the federal interstate highway system. Then regulators might certify that certain roads, starting with state highways and major arterials, have been mapped to a sufficient level for autonomous vehicles to use. “There are companies that are taking that stopgap approach right now,” says Romano. “They would like to map in sufficient 3D detail major highway infrastructure, at either the federal or state level, but not down to the county or city/township level.” That is a stopgap approach, he explains, because “eventually device size and system architectures should lead to the vehicles themselves actually being able not only to map but also to update and maintain change.” Both hardware and software companies are working on this.

Meanwhile, Romano points out, most of today’s vehicle-mounted systems are more about collision avoidance than they are about mapping. Harris, he says, is “actively pursuing building system maps for navigation systems,” utilizing Geiger-mode lidar as a data source. “We can do very high accuracy, very detailed mapping of transportation features at scale. We can collect very, very large areas at very high resolution with Geiger-mode lidar and answer part of the problem set for a true 3D navigation system database.”

He sees that as a stopgap, however. “I don’t think, long term, that all navigation systems can be pre-built, because roads change, construction happens, accidents happen. It is not a real-time system.” However, it would be a start because today, he points out, “we don’t even have a nationwide basemap of sufficient detail, even at the federal highway level.” See Figures 3-4.

CONCLUSIONS

The road ahead for driverless cars is still full of obstacles. However, a mix of geospatial technologies will eventually enable manufacturers to navigate around these obstacles, with great social benefits. In this mix, lidar stands out as an indispensable component.

FIGURE 3. This example of Geiger-mode lidar from Harris shows an intersection in Raleigh, North Carolina, which helps clients analyze critical transportation infrastructure.


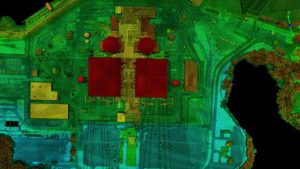

FIGURE 4. Used for utility asset mapping and management, this Geiger-mode lidar image from Harris Corporation is of a power generation station near Charlotte, North Carolina.


An Autopilot Fatality: Tesla Model S

On May 7, 2016, on U.S. Highway 27 in northern Florida, Joshua Brown was killed when his Tesla Model S, traveling at 74 miles per hour, ploughed under the trailer of an 18-wheel truck that had just made a left turn across the divided highway, heading for a side road.

Brown’s was one of about 90 traffic fatalities on an average day in the United States. His death, however, made headlines because he had engaged his Tesla’s autopilot mode. According to Tesla’s account of the crash, the car’s sensor system failed to distinguish the white truck and trailer against a bright spring sky and did not automatically activate the brakes. Brown did not take control and brake, either. In addition to its cameras, the Model S has radar sensors that could have spotted the trailer. However, Tesla CEO Elon Musk wrote on Twitter that the radar “tunes out what looks like an overhead road sign to avoid false braking events.” Tesla noted that the fatality was the first in just over 130 million miles where its autopilot was activated.

Tesla’s autopilot is still in development but is deemed good enough to be tried experimentally by many users. The manufacturer emphasizes that its Model S disables the autopilot by default and requires drivers to explicitly acknowledge that it is new technology and still in a public beta phase before they can enable it. When they do, the acknowledgment box explains that the technology “is an assist feature that requires you to keep your hands on the steering wheel at all times” and that “you need to maintain control and responsibility for your vehicle” while using it. In addition, every time a driver enables the autopilot, the car reminds the driver to always hold the wheel and be prepared to take over at any time. If it does not detect the driver’s hands on the wheel, it gradually slows the car until it detects them again.

Brown, 40, who owned a technology company called Nexu Innovation in Canton, Ohio, was a Tesla enthusiast. One of several videos that he posted on YouTube of his car on autopilot showed it avoiding a crash on the highway, and the footage racked up one million views after Musk tweeted it.